Mathematical Proof: You Should Just Start Reading in Your Target Language

The goal is to find a function to approximate the ease of reading as a function as progress through a book with the assumption that nothing is known at the beginning of reading.

To create a model to simulate one's mind when reading a book, let's first set up some variables.

Let \(n=\)the number of unique words in the book

Let \(L=\)the total number of words in the book

We will consider the list of unique words in the book to be ordered from most frequent to least frequent and numbered 1 to \(n\)

Let \(p(w)\) be a function that gets the frequency of the \(w\)th word in the list

According Zipf's Law, the frequency of each word will be equal to \(p(w)=\frac{f_1}{w}\) where \(f_1\) is the frequency of the most frequent word (\(w=1\)).

The sum of the frequencies of the words, \(\sum_{w=1}^{n}p(w)\), will be equal to \(100\%\) simply because the chance of a given word being any word in the list of all possible words is \(100\%\).

Therefore, to find \(f_1\) so that we can fully define \(p(w)\), we need to find what value of \(f_1\) will make the equality \(\sum_{w=1}^{n}p(w)=\sum_{w=1}^{n}\frac{f_1}{w}=100\%\) true.

\(\sum_{w=1}^{n}\frac{f_1}{w}=100\%\)

is equivalent to

\(f_1*\sum_{w=1}^{n}\frac{1}{w}=100*\frac{1}{100}\)

because multiplying each term of the sum by \(f_1\) is the same as multiplying the whole sum by \(f_1\) and \(\%=\frac{1}{100}\) by definition. Simplifying the right side and then dividing each side by \(\sum_{w=1}^{n}\frac{1}{w}\) to isolate \(f_1\) then yields:

\(f_1=\frac{1}{\sum_{w=1}^{n}\frac{1}{w}}\)

This cannot be simplified any further because \(\sum_{w=1}^{n}\frac{1}{w}\) is the partial sum of the harmonic series which does not have a simpler solution, but you can use a calculator to calculate it. It can also be approximated by \(\frac{1}{ln(n)}\) (a slight overestimate).

Continuing expanding on the model:

Let \(r=\) the chance of learning a word after seeing it

Let \(k(w,i)=\)the probability of remembering the \(w\)th most frequent word after reading \(i\) words

by the opposite:

\(k(w,i)=100\%-\)the probability of not remembering the \(w\)th most frequent word after reading \(i\) words

from \(i\) repeated independent events (not really independent, but it's only a slight underestimate):

\(k(w,i)=100\%-(\)the probability of not learning the \(w\)th most frequent word after reading a word\()^i\)

by the opposite:

\(k(w,i)=100\%-(100\%-\)the probability of learning the \(w\)th most frequent word after reading a word\()^i\)

\(k(w,i)=100\%-(100\%-\)the probability of reading the \(w\)th most frequent word\(*\)the probability of learning a word after seeing it\()^i\)

\(k(w,i)=100\%-(100\%-p(w)*r)^i\)

converting from percents \(100\%=1\):

\(k(w,i)=1-(1-p(w)*r)^i\)

This completes the definition of \(k(w,i)\) because \(r\) is a variable we manually manipulate, \(p(w)\) is already defined, and \(w\) and \(i\) are inputs.

Let \(e_w(i)=\)the probability of remembering the \(i\)th word read

\(e_w(i)=\)the sum of the probabilities of remembering each word after reading \(i\) words weighted by the probability of seeing each word

\(e_w(i)=\sum_{w=1}^{n}(\)the probability of remembering the \(w\)th most frequent word\(*\)the probability of seeing the \(w\)th most frequent word\()\)

\(e_w(i)=\sum_{w=1}^{n}(k(w,i)*p(w))\)

This completes the definition of \(e_w(i)\).

Let \(a=\)the average sentence length

Let \(e_s(i)=\)the probability of remembering all the words in the next sentence after reading \(i\) words

\(e_s(i)=\)the probability of remembering the next \(a\) words after reading \(i\) words

by repeated \(a\) repeated independent events (assuming nothing was learned from the next \(a\) words):

\(e_s(i)=(\)the probability of remembering a word after reading \(i\) words\()^a\)

\(e_s(i)=(e_w(i))^a\)

Let \(E_w(t)=\)the chance of remembering the next word after reading \(t\) percent of the book

\(E_w(t)=e_w(\)how many words read after reading \(t\) percent of the book\()\)

\(E_w(t)=e_w(\)the number of words in the book\(*\)the percent of words read\(*\frac{1}{100\%})\)

\(E_w(t)=e_w(\frac{L*t}{100\%})\)

Let \(E_s(t)=\)the chance of remembering the next sentence after reading \(t\) percent of the book

\(E_s(t)=e_s(\)how many words read after reading \(t\) percent of the book\()\)

\(E_w(t)=e_s(\)the number of words in the book\(*\)the percent of words read\(*\frac{1}{100\%})\)

\(E_w(t)=e_s(\frac{L*t}{100\%})\)

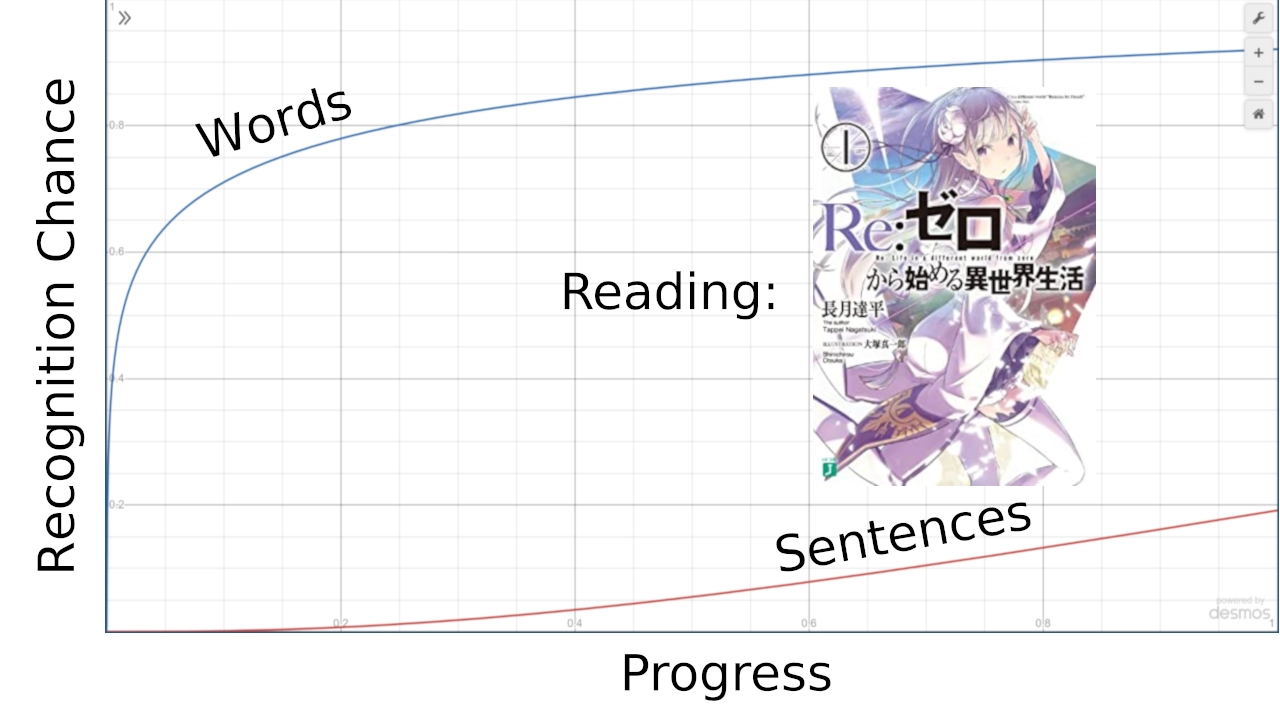

This completes the model as we have found a few functions \((e_w(i), e_s(i), E_w(t), E_s(t))\) to estimate the ease of reading as a function of progress. Below is an implementation of the model you can manipulate (note: not all the input variables will be used depending on the calculation).

Based on the model, for me to have a 50% of remembering the next word when reading the first volume of Re:Zero, which as 7519 unique words and 52997 words total (according jpdb.io: https://jpdb.io/novel/1611/re-zero-starting-life-in-another-world), assuming I knew 0 Japanese before reading and that I had a 50% chance of learning a word each time I saw it (probably assisted by Anki), I would only have to read about 1.27% of the book (or about 4 pages) to have about a 50% chance knowing the next word, which is much less than I thought.

By using Yomitan for reading on the web, Takoboto for dictionary lookup on mobile (and creating Anki cards), and Google's Japanese handwriting OCR, I have reduced the friction of looking up new words a lot. So, that combined with the promising predictions has made pushing through reading difficult material a lot more encouraging.

Last updated: Mon, 01 Apr 2024 16:02:40 EDT

Hickey, C. L. (2024, March 25). Mathematical Proof: You Should Just Start Reading in Your Target Language. Clayton Hickey. https://claytonhickey.me/blog/mathematical-proof-you-should-just-start-reading-in-your-target-language/.